British startup Synthesia announced a $200 million Series E financing round and a $4 billion valuation for its B2B AI video platform, which targets the agentic future. Investors include the venture arms of Google and Nvidia.

In a press release, CEO Victor Riparbelli discussed his company’s plans:

“Synthesia was founded on two core beliefs: first, that AI will bring the cost of content creation down to zero. And secondly, that AI video provides a better, more engaging way for organizations to communicate and learn. (…)

We see a rare convergence of two major shifts: a technology shift with AI Agents becoming more capable, and a market shift where upskilling and internal knowledge sharing have become board-level priorities. We intend to build the defining company at that intersection, by combining our know-how in AI video with our ability to build and integrate AI technologies into products and services that solve real business needs.”

Read the release. (January 26)

The core product uses generative AI, beginning with avatars, to bring to life Synthesia’s other tools for training, marketing, and internal communications.

See use cases on Synthesia’s website.

More: Synthesia doubles to $4B, preps AI video agents (January 26) – The Deep View

The company claims a whopping 90% market share among the Fortune 100. Back in 2023, CEO Riparbelli told CNBC that Synthesia was working with only 35% of the Fortune 100.

From tipsheet: With Synthesia-type tech, one can envision advertising and marketing technology workflows being managed through an avatar, via voice, rather than through a text-based GUI.

Amazon DSP media manager Gigi appears to be an example of this today.

LLMs & CHATBOTS

Developments

- AI as a Scientific Collaborator – From biology to black holes, ChatGPT is accelerating research (January) – OpenAI (PDF)

- Meta promotes Oakley AI glasses in Super Bowl teaser with IShowSpeed (January 26) – Ad Age (subscription)

- Your favorite work tools are now interactive inside Claude (January 26) – Anthropic

LLMs & CHATBOTS

Video citations helping YouTube

Adweek’s Trishla Ostwal yesterday reviewed citation data from generative engine optimization firm Bluefish and found that YouTube has overtaken social media giant Reddit as the website cited most often by the large language models (LLMs) that Bluefish tracked.

In a nutshell, video being converted to transcripts and text is helping YouTube win.

Ms. Ostwal reported:

“YouTube had previously fallen behind other user-generated sources because of the difficulty large-language models, or LLMs, have in pulling information from videos, but transcripts, explainers, and other information associated with videos on YouTube have allowed the video platform to flourish as a source that machines can easily read.

Bluefish found that YouTube appeared as a cited source in 16% of LLM answers over the past six months, compared with 10% for Reddit—a reversal from earlier periods when Reddit was the dominant social source.”

Related: “Bluefish debuts AI Impact and Influence Analytics…” (November 1) – Bluefish blog

From tipsheet: These citation results can’t be a surprise to Google, can they? It was only a matter of time until Google figured out a way to make YouTube’s video content work better in an LLM, particularly its own Gemini. (They made video text!)

Directionally, this is important for marketers and publishers – YouTube and video investments should not be overlooked for citations in LLMs.

But, understanding how citations work in each LLM and its associated chatbot would be another important metric.

As of early 2024, it appeared OpenAI could not spider YouTube content.

LLMs & CHATBOTS

More OpenAI ad tidbits

OpenAI’s enterprise sales team is busy reaching out to potential customers for its ad product, The Information reported yesterday. For now, the company is apparently avoiding mega-deals with agency holding companies and their larger marketer clients as it tests and learns.

The Information’s Ann Gehan and Catherine Perloff reported:

“OpenAI has told early advertisers that it will give them data about impressions, or how many views an ad gets, as well as how many total clicks it gets, a media buyer working with some of the advertisers said. Advertisers will get high-level insights like total ad views…”

As reported last week by Ad Age, OpenAI is seeking a $60 CPM.
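For a sense of scale, here is a minimal sketch of the arithmetic behind a $60 CPM combined with the impression and click totals OpenAI reportedly plans to share. The impression and click counts are hypothetical figures chosen for illustration, not reported data, and the function names are our own.

```python
def media_cost(impressions: int, cpm: float) -> float:
    """Cost of a buy priced per thousand impressions (CPM)."""
    return impressions / 1000 * cpm


def effective_cpc(impressions: int, clicks: int, cpm: float) -> float:
    """Effective cost per click implied by a CPM-priced buy."""
    if clicks <= 0:
        raise ValueError("clicks must be positive to compute a cost per click")
    return media_cost(impressions, cpm) / clicks


CPM = 60.0             # the rate reported by Ad Age
impressions = 250_000  # hypothetical campaign size
clicks = 1_500         # hypothetical click total

cost = media_cost(impressions, CPM)
ctr = clicks / impressions
ecpc = effective_cpc(impressions, clicks, CPM)

print(f"Cost: ${cost:,.2f}")
print(f"Click-through rate: {ctr:.2%}")
print(f"Effective CPC: ${ecpc:,.2f}")
```

Under these assumed numbers, the buy implies a cost per click in the double digits, which is one way to see why buyers are asking about the connection to the lower funnel.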

Evertune AI CEO and co-founder Brian Stempeck told The Information: “The most important thing that ChatGPT has to build next year is the connection to the lower funnel—the connection to the sale.”

Read: OpenAI Seeks Premium Prices in Early Ads Push (January 26) – The Information (subscription)

From tipsheet: At the outset, the OpenAI ad opportunity is all about contextual ad targeting and the magic of an ad placement within the conversational content created between the user and the chatbot (and the LLM behind it).

Considering consumers’ time spent in the chatbot, audience-based retargeting via programmatic channels would seem inevitable. OpenAI is aiming to balance consumer trust (ad-supported versions of ChatGPT will be the free or “Go” versions) with the company’s need to pay for AI’s extreme costs.

LLMs & CHATBOTS

Agents are taking over coding

Yesterday afternoon on X, OpenAI co-founder Andrej Karpathy made an observation regarding AI coding capabilities that likely impacts every industry including advertising and marketing.

He wrote, in part:

“Given the latest lift in LLM coding capability, like many others I rapidly went from about 80% manual+autocomplete coding and 20% agents in November to 80% agent coding and 20% edits+touchups in December. (…) This is easily the biggest change to my basic coding workflow in ~2 decades of programming and it happened over the course of a few weeks. I’d expect something similar to be happening to well into double digit percent of engineers out there, while the awareness of it in the general population feels well into low single digit percent.”

Mr. Karpathy concluded:

“LLM agent capabilities (Claude & Codex especially) have crossed some kind of threshold of coherence around December 2025 and caused a phase shift in software engineering and closely related. The intelligence part suddenly feels quite a bit ahead of all the rest of it – integrations (tools, knowledge), the necessity for new organizational workflows, processes, diffusion more generally. 2026 is going to be a high energy year as the industry metabolizes the new capability.”

Read his entire post on X. (January 26)

From tipsheet: The speed at which AI development is moving is surprising even the experts.

PROTOCOLS

Opinion: AdCP has its place but…

Co-founder and chief science officer Aaron Andalman of contextual ad targeting firm Cognitiv reviewed Ad Context Protocol (AdCP) in an op-ed on AdExchanger yesterday.

In the piece, titled “The AdCP Hype Problem: Why Standardized AI Workflows Don’t Equal Better Media Outcomes,” Mr. Andalman sees potential but…:

“AdCP should stay on your radar. It is a sensible standard, and, over time, it may make AI-driven workflows easier to build. But it should not be the primary focus.

If your goal is performance, the more important question is not how an AI chatbot invokes actions. For the foreseeable future, performance will come from platforms using AI to improve targeting, prediction and optimization at the core of programmatic buying. This is work advertisers will need to find on their platforms or build themselves.”

Read more in AdExchanger. (January 26)

Related: Inside the debate over agentic advertising and standards (January 26) – Digiday (subscription)

From tipsheet: AdCP versus IAB Tech Lab’s Agentic RTB Framework (ARTF) continues to be a top industry conversation among companies and startups trying to understand how to get ahead of the agentic trend.

Mr. Andalman delivers a thoughtful argument and his company, Cognitiv, undoubtedly aims to be one of those platforms using AI to improve targeting that he describes.

RETAIL MEDIA

Ads in a retailer’s chatbot and Criteo

On LinkedIn, Criteo CEO Michael Komasinski previews an article written by Criteo President of Retail Media, Sherry Smith, about the agentic commerce future.

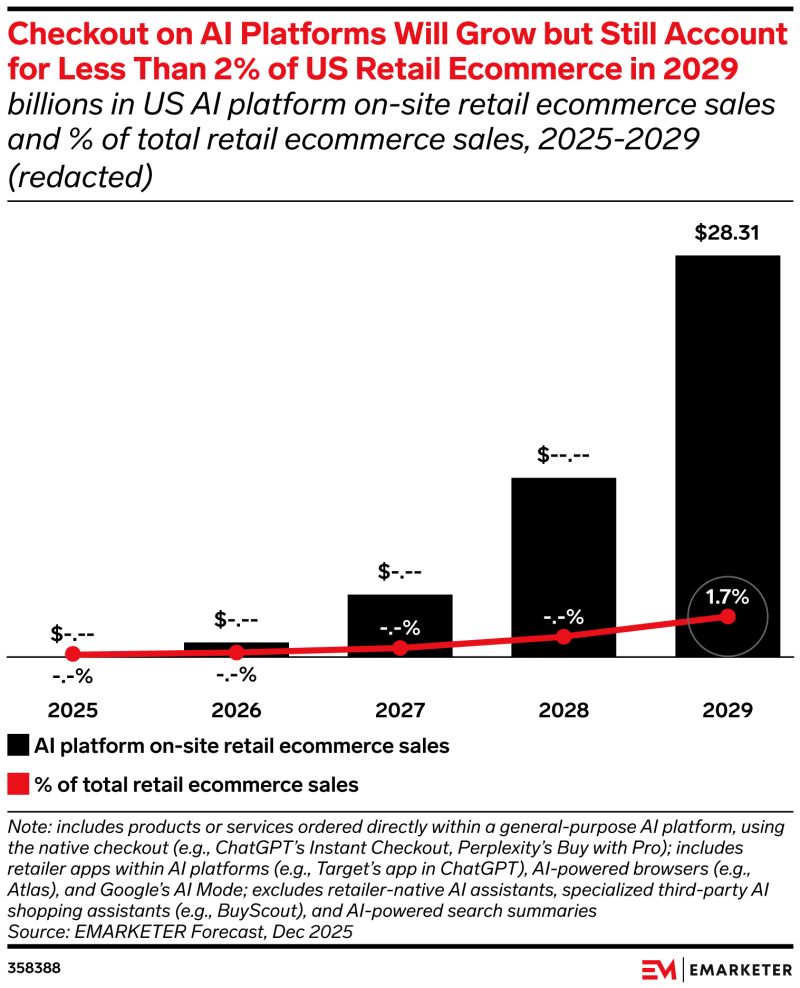

Komasinski flashes an eMarketer forecast graphic on AI checkout (above) and explains that commerce isn’t ALL migrating away to AI chatbots such as ChatGPT.

He adds:

“As commerce becomes more conversational, what matters most is the data spine behind it, meaning real purchase signals, product intelligence, closed-loop measurement, and scale. This is what turns AI-driven intent into real outcomes, and it’s where retailers have a unique advantage.”

Read more on LinkedIn. (January 26)

More: “Why retail media rises in an agentic commerce era” (January 26) – Sherry Smith on Criteo’s company blog

Related: “Agentic commerce is emerging, just not the way most people expect” (December 16) – Michael Komasinski on Criteo’s blog

From tipsheet: Make sure to see the “Criteo-powered conversational shopping experience” that the company is offering to retailers, which is embedded in the post.

Criteo is creating “ads in a chatbot” for retailers’ websites.

LLMs & CHATBOTS

LLMs, small models and user intent

In an effort to optimize interaction with large language models, Google researchers announced a new approach which extracts “user intents from UI interaction trajectories using small models, which shows better results than significantly larger models.”

Search Engine Land’s Danny Goodwin breaks down the essentials of the researchers’ paper:

“Google’s solution is to split the task into two simple steps that small, on-device models can handle well.

Step one: Each screen interaction is summarized separately. The system records what was on the screen, what the user did, and a tentative guess about why they did it.

Step two: Another small model reviews only the factual parts of those summaries. It ignores the guesses and produces one short statement that explains the user’s overall goal for the session. By keeping each step focused, the system avoids a common failure mode of small models: breaking down when asked to reason over long, messy histories all at once.”
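The two steps described above can be sketched roughly as follows. This is a toy illustration, not Google’s implementation: `summarize_step`, `extract_intent`, and `toy_infer` are hypothetical names, and simple placeholder functions stand in for the small on-device models. The point it shows is the data flow, where step two only ever sees the factual fields and never the per-screen guesses.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class InteractionSummary:
    screen: str       # what was on the screen (factual)
    action: str       # what the user did (factual)
    speculation: str  # tentative guess about why (ignored in step two)


def summarize_step(screen: str, action: str) -> InteractionSummary:
    """Step one stand-in: summarize a single screen interaction."""
    guess = f"maybe the user wants something related to '{screen}'"
    return InteractionSummary(screen=screen, action=action, speculation=guess)


def extract_intent(summaries: List[InteractionSummary],
                   infer: Callable[[str], str]) -> str:
    """Step two: hand only the factual fields to a second model."""
    facts = "\n".join(
        f"Screen: {s.screen} | Action: {s.action}" for s in summaries
    )
    return infer(facts)


def toy_infer(facts: str) -> str:
    """Placeholder for the second small model; it only counts the steps."""
    steps = len(facts.splitlines())
    return f"User goal inferred from {steps} factual step summaries"


# Hypothetical two-screen session.
trajectory = [
    ("flight search", "typed 'NYC to SFO'"),
    ("results list", "tapped cheapest fare"),
]
summaries = [summarize_step(screen, action) for screen, action in trajectory]
intent = extract_intent(summaries, toy_infer)
print(intent)
```

In a real system, each placeholder would be a call to a small model, and keeping each call narrow is what the researchers credit for avoiding breakdowns on long, messy histories.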

Read more in Search Engine Land. (January 26)

More:

- Blog post: “Small models, big results: Achieving superior intent extraction through decomposition” (January 22) – Google Research blog

- Paper: “Small Models, Big Results: Achieving Superior Intent Extraction through Decomposition” (November 2025) – ACL Anthology

From tipsheet: By using smaller models to divine intent, interactions are faster, less expensive and perhaps privacy-safe in that they could be hosted on the user’s device.

SELL-SIDE

Publishers segmenting for AI

Alex Brownstein of advisory firm 3C Ventures looks at publisher Arena Group (Sports Illustrated, Men’s Journal, among others) and how its proprietary AI platform, known as Encore, targets three audiences identified as Drive-Bys, Casuals and Loyalists.

Mr. Brownstein explained, “The company’s segmentation runs on AI systems, but humans set the rules. Encore identifies patterns — ‘This person reads finance content, visits on weekends, scrolls on his phone’ — and the Arena team decides what to do with that information.”

Read more in Marketecture. (January 26)

More: “For Publishers, AI Gives Monetizable Data Insight But Takes Away Traffic” (November 7) – AdExchanger

PEOPLE MOVES

Now hiring

- Former LoopMe executive Bruce Bell joins CTV demand-side platform JamLoop as SVP, Head of Sales (January 26) – LinkedIn

MORE

- Trust is the New Differentiator in Identity – Keith Petri, SVP, Viant on his personal blog

- AI has changed holiday shopping: Here’s what the numbers say (January 23) – Marketing Dive

- TikTok Is Now Collecting Even More Data About Its Users. Here Are the 3 Biggest Changes – Wired

- Airpost Launches AI Ad Engine With a Twist: People (January 20) – press release

- How WPP is using AI to accelerate the production cycle (December 2025) – Think With Google